relevant supporting evidence

summary

The research on students’ abilities to use evidence when writing scientific arguments suggests that students usually try to use data as evidence (Sandoval & Millwood, 2005) but routinely use inappropriate evidence that is either irrelevant or non-supporting (L. Kuhn & Reiser, 2005; McNeill & Krajcik, 2007; Sandoval, 2003). This is noteworthy because relevance and support affect the quality of scientific evidence and, therefore, the quality of the argument as a whole (NRC, 2012). We define relevant evidence as measurements or observations that address (or fit with) the science topic. Relevant data has the potential to be high-quality evidence if it also supports the claim. Supporting evidence, in turn, is evidence that exemplifies the relationship established in the claim. For instance, if a claim were based on a trend in the data (e.g., earthquakes are stronger when their focus is closer to the Earth’s surface), relevant evidence would address the science topic (e.g., depth can affect the strength of an earthquake), and supporting evidence would exemplify the relationship (e.g., Earthquakes A and B were shallow and were also stronger than the other earthquakes). The goal, therefore, is for students to recognize that the quality of scientific evidence depends on both relevance and support.
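To make the distinction between relevant and supporting evidence concrete, the sketch below encodes the earthquake example as a toy check. It is a minimal illustration under stated assumptions, not part of the assessments: the `Quake` records and their values are hypothetical, "relevant" is operationalized as reporting both variables the claim is about (focus depth and strength), and "supporting" as relevant evidence in which every shallower earthquake is also the stronger one.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import List, Optional


@dataclass
class Quake:
    """One hypothetical observation cited as evidence."""
    name: str
    depth_km: Optional[float]   # focus depth; None if the evidence omits it
    strength: Optional[float]   # hypothetical strength score; None if omitted


# Assumption: the claim is about these two variables, so evidence that
# reports both of them "addresses the science topic".
CLAIM_VARIABLES = ("depth_km", "strength")


def is_relevant(evidence: List[Quake]) -> bool:
    """Relevant: every observation reports the variables the claim is about."""
    return bool(evidence) and all(
        getattr(quake, var) is not None
        for quake in evidence
        for var in CLAIM_VARIABLES
    )


def _fits_trend(a: Quake, b: Quake) -> bool:
    """True if the shallower of the two quakes is also the stronger one."""
    if a.depth_km == b.depth_km:
        return True  # equal depths say nothing about the claimed trend
    shallow, deep = (a, b) if a.depth_km < b.depth_km else (b, a)
    return shallow.strength > deep.strength


def is_supporting(evidence: List[Quake]) -> bool:
    """Supporting: relevant AND every pair of observations fits the claimed trend."""
    if not is_relevant(evidence) or len(evidence) < 2:
        return False  # a trend needs at least two observations to compare
    return all(_fits_trend(a, b) for a, b in combinations(evidence, 2))


# Hypothetical evidence sets for the claim "shallower earthquakes are stronger".
irrelevant = [Quake("E", None, 6.0), Quake("F", None, 5.0)]   # no depth reported
relevant_only = [Quake("G", 10, 3.0), Quake("H", 300, 6.0)]   # contradicts the trend
supporting = [Quake("A", 10, 7.0), Quake("B", 15, 6.5), Quake("C", 300, 4.0)]

for label, ev in [("irrelevant", irrelevant),
                  ("relevant_only", relevant_only),
                  ("supporting", supporting)]:
    print(label, is_relevant(ev), is_supporting(ev))
```

The second set shows the case the research highlights: evidence that is on topic (it reports depth and strength) yet does not support the claim.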

definitions

Construct map

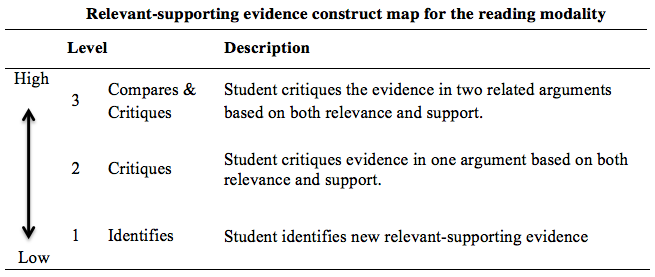

A construct is a characteristic of an argument. Construct maps use research on student learning as well as expert knowledge to separate the construct into distinct levels that characterize students' progression toward greater expertise (Wilson, 2005). The reading relevant-supporting evidence construct map (shown above) has three levels: 1) identifies, 2) critiques, and 3) compares & critiques.

assessments

We developed items that correspond to the identifies, critiques, and compares & critiques levels of the reading relevant-supporting evidence construct map. The first two items are multiple-choice, and the third item is constructed-response. Each item targets the ability associated with a single construct level. For instance, if a student answers the first two items correctly, then the student's ability corresponds to at least the "critiques" level.

Each set of three items uses the same introductory information: a wonderment statement followed by a sample student's argument. Because the three items use this introductory information, albeit in different ways, we refer to each set of three items as a testlet. We developed four testlets: two focus on earthquakes, and the other two focus on volcanoes.

rubrics and answer key

The items at the identifies and critiques levels are multiple-choice, whereas the compares & critiques items are constructed-response. Therefore, we developed a rubric to score the constructed-response item within each testlet. Each rubric includes sample student responses for each level. The document below provides the answers to the multiple-choice items as well as the specific rubric for the constructed-response item within each testlet.

teaching strategies

It is our hope that, over time, students’ abilities will move toward the compares & critiques level of the construct map. To help teachers reach this goal, we have developed teaching strategies that correspond to each level of the construct map.

Construct Level

3: Compare and Critique

2: Critique

1: Identify

0: Does Not Identify

Description of Teaching Strategies

Resources

Tech reports

The tech report provides the psychometric analyses from pilot studies with middle school students.

references

Kuhn, L., & Reiser, B. (2005). Students constructing and defending evidence-based scientific explanations. Paper presented at the annual meeting of the National Association for Research in Science Teaching, Dallas, TX.

McNeill, K. L., & Krajcik, J. (2007). Middle school students’ use of appropriate and inappropriate evidence in writing scientific explanations. In M. Lovett & P. Shah (Eds.), Thinking with data: The proceedings of the 33rd Carnegie symposium on cognition. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

National Research Council. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. Washington, DC: National Academy of Sciences.

Norris, S. P., & Phillips, L. M. (1994). Interpreting pragmatic meaning when reading popular reports of science. Journal of Research in Science Teaching, 31(9), 947-967.

Osborne, J., Erduran, S., & Simon, S. (2004). Enhancing the quality of argumentation in school science. Journal of Research in Science Teaching, 41(10), 994-1020.

Phillips, L. M., & Norris, S. P. (1999). Interpreting popular reports of science: What happens when the reader’s world meets the world on paper? International Journal of Science Education, 21(3), 317-327.

Ratcliffe, M. (1999). Evaluation of abilities in interpreting media reports of scientific research. International Journal of Science Education, 21(10), 1085-1099.

Sandoval, W. A. (2003). Conceptual and epistemic aspects of students’ scientific explanations. Journal of the Learning Sciences, 12, 5-51.

Sandoval, W. A., & Millwood, K. A. (2005). The quality of students’ use of evidence in written scientific explanations. Cognition and Instruction, 23(1), 23-55.